Working with Pagination

Learn how to navigate through multiple pages and collect data from each page.

Understanding Pagination

Many websites split their content across multiple pages to improve loading times and user experience. When scraping data, you often need to navigate through all these pages to get the complete dataset. This tutorial will teach you how to handle different pagination types in Scrapify.

Types of Pagination

Before we start, let's understand the common pagination patterns you'll encounter:

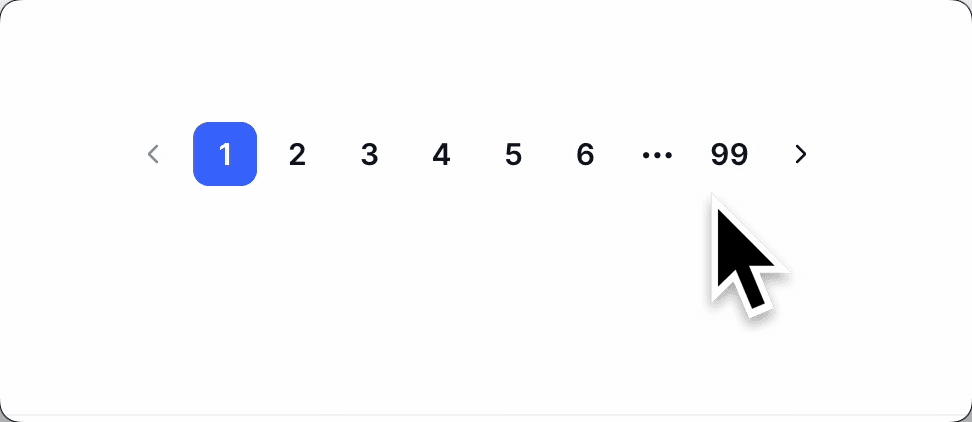

1. Numbered Pagination

The most traditional format: page numbers (1, 2, 3, ...), often accompanied by "Next" and "Previous" buttons.

2. "Load More" Buttons

A single button that loads additional content on the same page when clicked.

3. Infinite Scroll

Content automatically loads as the user scrolls down the page.

4. URL Parameter Pagination

Pages are accessed by changing a parameter in the URL (e.g., ?page=1, ?page=2).

Pro Tip

Before setting up pagination, always explore the website manually to understand its pagination mechanism. This will help you choose the right approach in Scrapify.

Setting Up a Basic Pagination Scraper

Let's start with the most common scenario: numbered pagination with "Next" buttons.

Step 1: Create Your Initial Crawler

- Set up a new project in Scrapify and select "Crawler" as the extraction type

- Navigate to the first page of the content you want to scrape

- In the crawler configurator, add a "Scrape" action to extract the data elements you need (product names, prices, etc.)

- Test your extraction to ensure the correct data is being selected

Step 2: Add a Click Action for Pagination

- After your "Scrape" action, add a "Click Button" action

- Using the Scrapify selector tool, click on the "Next" or "→" button

- The tool will automatically generate an XPath selector for this button

- Confirm the action to add it to your configuration
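It is worth checking the generated selector. A selector that depends on an element's position can point at a different link once the pagination bar changes, for example when a "Previous" link appears on page 2. The sketch below uses Python's built-in ElementTree, which supports only a subset of XPath, on two hand-written, well-formed page snippets. The markup and the `rel="next"` attribute are assumptions; real sites may mark their "Next" link with a class or label instead, and whether a given Scrapify selector is positional depends on the page.

```python
import xml.etree.ElementTree as ET

# Hypothetical pagination bars for pages 1 and 2 (illustrative markup only).
PAGE_1 = """<html><body><nav>
  <a href="?page=1">1</a><a href="?page=2">2</a><a href="?page=3">3</a>
  <a href="?page=2" rel="next">Next</a>
</nav></body></html>"""

PAGE_2 = """<html><body><nav>
  <a href="?page=1" rel="prev">Previous</a>
  <a href="?page=1">1</a><a href="?page=2">2</a><a href="?page=3">3</a>
  <a href="?page=3" rel="next">Next</a>
</nav></body></html>"""


def link_text(page_html: str, xpath: str) -> str:
    """Return the text of the first element matching a (limited) XPath expression."""
    match = ET.fromstring(page_html).find(xpath)
    return match.text if match is not None else "<no match>"


for name, html in (("page 1", PAGE_1), ("page 2", PAGE_2)):
    positional = link_text(html, ".//nav/a[4]")              # depends on link order
    by_attribute = link_text(html, ".//nav/a[@rel='next']")  # depends on rel="next"
    print(f"{name}: positional={positional}, attribute={by_attribute}")
# page 1: positional=Next, attribute=Next
# page 2: positional=3, attribute=Next
```

The positional selector silently clicks page "3" on page 2, which matches the "selector no longer works on some pages" symptom described under troubleshooting.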

Step 3: Create a Loop for Multiple Pages

- Add a "Loop" action at the beginning of your configuration

- Set the loop type to "Fixed List" if you know exactly how many pages to scrape

- Alternatively, use "Variable List" if the number of pages may vary

- Specify the maximum number of iterations (e.g., 10 pages)

- Nest your Scrape and Click Button actions inside this Loop

Step 4: Add a Wait Action

- After the Click Button action but before the next Scrape, add a "Wait" action

- Configure the duration (recommended: 3-5 seconds)

- This ensures the page has time to load before the next scrape attempt
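To make the order of operations concrete, here is a plain-Python sketch of the loop from Steps 1 to 4. It does not use Scrapify: `FakePaginatedSite`, `scrape`, `click_next`, and `crawl_fixed` are hypothetical names standing in for the Scrape, Click Button, and Wait actions, and a fixed iteration count plays the role of a "Fixed List" loop.

```python
import time
from typing import Callable, List


class FakePaginatedSite:
    """Hypothetical site with numbered pages; not part of any Scrapify API."""

    def __init__(self, pages: List[List[str]]):
        self.pages = pages
        self.current = 0  # index of the page currently loaded

    def scrape(self) -> List[str]:
        # Stands in for the "Scrape" action: read the items on the current page.
        return list(self.pages[self.current])

    def click_next(self) -> bool:
        # Stands in for "Click Button" on "Next"; returns False if there is no next page.
        if self.current + 1 < len(self.pages):
            self.current += 1
            return True
        return False


def crawl_fixed(site: FakePaginatedSite, iterations: int, wait_seconds: float = 3.0,
                sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Fixed-length loop: Scrape -> Click Button -> Wait, repeated `iterations` times."""
    results: List[str] = []
    for _ in range(iterations):
        results.extend(site.scrape())
        site.click_next()
        sleep(wait_seconds)  # let the next page load before the next Scrape
    return results


site = FakePaginatedSite([["Lamp", "Desk"], ["Chair"], ["Shelf"]])
# sleep is replaced with a no-op so the demo runs instantly
print(crawl_fixed(site, iterations=3, sleep=lambda seconds: None))
# ['Lamp', 'Desk', 'Chair', 'Shelf']
```

In this sketch, setting `iterations` higher than the real number of pages makes the loop scrape the last page again, which is one way duplicate data can creep in. Check the output of your first run to see how your own crawler behaves at the last page.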

Important Note

Always set a reasonable maximum iteration limit and wait times between actions to avoid overloading websites. This is not only considerate toward the site owner but also reduces the chance of your IP being blocked.

Handling URL Parameter Pagination

For websites that use URL parameters for pagination (e.g., ?page=1, ?page=2):

Step 1: Identify the URL Pattern

- Navigate through a few pages manually and observe how the URL changes

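- Identify the parameter that changes (often "page", "p", or "offset")

- Note the starting value and how it increments (a "page" parameter usually goes up by 1, while an "offset" parameter usually goes up by the number of items per page)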
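Comparing two consecutive URLs is easy to do by eye, but the same check can be scripted. This hypothetical helper (not a Scrapify feature) uses only Python's standard `urllib.parse` module and assumes exactly one numeric query parameter differs between the two URLs. Many sites leave the parameter off page 1, so comparing pages 2 and 3 is often more reliable.

```python
from typing import Tuple
from urllib.parse import parse_qs, urlparse


def find_page_parameter(url_a: str, url_b: str) -> Tuple[str, int, int]:
    """Hypothetical helper (not a Scrapify feature): return (parameter, start, step).

    Assumes exactly one numeric query parameter differs between two consecutive URLs.
    """
    query_a = parse_qs(urlparse(url_a).query)
    query_b = parse_qs(urlparse(url_b).query)
    changed = [name for name in query_a if name in query_b and query_a[name] != query_b[name]]
    if len(changed) != 1:
        raise ValueError(f"expected one changing parameter, found {changed}")
    name = changed[0]
    start = int(query_a[name][0])
    return name, start, int(query_b[name][0]) - start


print(find_page_parameter("https://example.com/shoes?sort=price&page=2",
                          "https://example.com/shoes?sort=price&page=3"))
# ('page', 2, 1)
print(find_page_parameter("https://example.com/list?limit=24&offset=24",
                          "https://example.com/list?limit=24&offset=48"))
# ('offset', 24, 24)
```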

Step 2: Configure URL List Loop

- Create a list of URLs with the changing parameter values

- In the crawler configurator, add a "Loop" action

- Set the loop type to "URL List"

- Enter the list of URLs you want to scrape
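Typing out dozens of URLs by hand is error-prone. The hypothetical helper below (not a Scrapify feature) generates the list from the pattern identified in Step 1 (parameter name, starting value, and step) while keeping any other query parameters, such as sort order. The output is a plain list of URLs that you could enter into the "URL List" loop.

```python
from typing import List
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def build_page_urls(base_url: str, param: str, start: int, step: int, count: int) -> List[str]:
    """Hypothetical helper: `count` URLs where `param` takes start, start+step, ..."""
    parts = urlparse(base_url)
    query = dict(parse_qsl(parts.query))  # keep existing parameters such as sort order
    urls = []
    for i in range(count):
        query[param] = str(start + i * step)
        urls.append(urlunparse(parts._replace(query=urlencode(query))))
    return urls


for url in build_page_urls("https://example.com/shoes?sort=price", "page", 1, 1, 3):
    print(url)
# https://example.com/shoes?sort=price&page=1
# https://example.com/shoes?sort=price&page=2
# https://example.com/shoes?sort=price&page=3
```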

Step 3: Add Scrape Action Inside the Loop

Inside the URL List Loop, add a Scrape action to extract data from each URL in the list. The crawler will automatically navigate to each URL and perform the scrape.

Working with "Load More" Buttons

For websites that use "Load More" buttons to append content to the existing page:

Step 1: Set Up the Loop Structure

- Add a "Loop" action with type "Fixed List" or "Single Element"

- Set the number of iterations based on how many times you need to click "Load More"

- Inside the loop, add the Scrape action first to capture the initial content

Step 2: Add Click and Wait Actions

- After the Scrape action, add a "Click Button" action targeting the "Load More" button

- Add a "Wait" action (recommended: 3-5 seconds) to allow new content to load

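- On each iteration, the Scrape action extracts everything currently on the page, including newly loaded items. Items loaded in earlier iterations are still on the page, so they can appear more than once in the combined results unless they are deduplicated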
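Because "Load More" appends items to the same page, each Scrape pass sees the items from earlier passes too. If your combined results contain repeats, a post-processing step like the following can merge them. This is a generic Python sketch run on exported records, not a Scrapify feature, and the field used as the unique key (`name` here) is an assumption; a product URL or ID is often a safer choice.

```python
from typing import Dict, Iterable, List

Record = Dict[str, str]


def merge_unique(snapshots: Iterable[List[Record]], key: str) -> List[Record]:
    """Hypothetical helper: combine repeated Scrape snapshots, keeping each record's first copy."""
    seen = set()
    merged: List[Record] = []
    for snapshot in snapshots:
        for record in snapshot:
            if record[key] not in seen:
                seen.add(record[key])
                merged.append(record)
    return merged


# Hypothetical snapshots from three iterations of Scrape -> Click "Load More" -> Wait.
# Each snapshot holds everything loaded so far, so earlier items repeat.
snapshots = [
    [{"name": "Lamp"}, {"name": "Desk"}],
    [{"name": "Lamp"}, {"name": "Desk"}, {"name": "Chair"}],
    [{"name": "Lamp"}, {"name": "Desk"}, {"name": "Chair"}, {"name": "Shelf"}],
]
print([r["name"] for r in merge_unique(snapshots, key="name")])
# ['Lamp', 'Desk', 'Chair', 'Shelf']
```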

Pro Tip

When using the "Similar Elements" feature in your Scrape action, Scrapify can automatically identify and extract all instances of your selected data, including newly loaded content.

Handling Infinite Scroll

For websites that automatically load more content as you scroll down:

Step 1: Configure a Loop with Scroll Actions

- Add a "Loop" action with type "Fixed List"

- Set the number of iterations based on how many times you want to scroll

- Inside the loop, start with a Scrape action to capture visible content

Step 2: Add Scroll and Wait Actions

- After the Scrape action, add a "Scroll to Bottom" action or "Scroll to Element" action

- Add a "Wait" action (5-10 seconds) to give the page time to load new content

- On each loop iteration, Scrapify scrapes the content currently loaded, scrolls down, and waits for the next batch of content to load
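A fixed number of scrolls can stop too early or keep scrolling after the feed has ended. A common alternative, shown here only as a generic Python sketch and not as a Scrapify setting, is to stop once a scroll produces no new items, with a cap as a safety net. `FakeFeed` and `scroll_until_exhausted` are hypothetical names. Comparing item counts assumes the page keeps earlier items in place, which is not true for feeds that remove off-screen items.

```python
import time
from typing import Callable, List


def scroll_until_exhausted(
    visible_items: Callable[[], List[str]],
    scroll_to_bottom: Callable[[], None],
    max_scrolls: int = 20,
    wait: Callable[[], None] = lambda: time.sleep(5),
) -> List[str]:
    """Hypothetical sketch: scroll until nothing new loads or max_scrolls is reached."""
    items = visible_items()
    for _ in range(max_scrolls):
        scroll_to_bottom()
        wait()  # like the Wait action: give new content time to load
        latest = visible_items()
        if len(latest) == len(items):
            break  # no growth: end of the feed, or the wait was too short
        items = latest
    return items


class FakeFeed:
    """Hypothetical feed that reveals two more posts per scroll, up to `total`."""

    def __init__(self, total: int):
        self.total = total
        self.shown = 2

    def visible(self) -> List[str]:
        return [f"post-{i}" for i in range(self.shown)]

    def scroll(self) -> None:
        self.shown = min(self.total, self.shown + 2)


feed = FakeFeed(total=5)
# wait is a no-op here so the offline demo runs instantly
print(scroll_until_exhausted(feed.visible, feed.scroll, wait=lambda: None))
# ['post-0', 'post-1', 'post-2', 'post-3', 'post-4']
```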

Advanced Pagination Techniques

Combining Multiple Pagination Methods

Some websites combine pagination methods: for example, numbered pages where each page also has its own "Load More" button. In Scrapify, you can handle this with nested loops:

- Create an outer "Loop" action for the primary pagination (e.g., numbered pages)

- Inside this loop, add your Scrape action to capture initial content

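- Add a nested "Loop" action for the secondary action (e.g., clicking "Load More")

- After the nested loop, still inside the outer loop, add a Click Button action for the primary pagination (e.g., the "Next" button)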

- Configure each loop with the appropriate number of iterations

- Add appropriate Wait actions between interactions to ensure content loads properly
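The nested structure can be sketched in plain Python against a hypothetical fake site. `FakeCategorySite` and `crawl_nested` are illustrative names, not Scrapify APIs. As a variation on the order described above, this sketch scrapes each page once, after the inner loop has finished expanding it, which avoids re-extracting the same items after every "Load More" click.

```python
import time
from typing import Callable, List


class FakeCategorySite:
    """Hypothetical site: numbered pages, each hiding extra batches behind "Load More"."""

    def __init__(self, pages: List[List[List[str]]]):
        self.pages = pages        # pages[p] is a list of batches of item names
        self.page = 0
        self.batches_shown = 1    # the first batch is visible on page load

    def scrape(self) -> List[str]:
        shown = self.pages[self.page][: self.batches_shown]
        return [item for batch in shown for item in batch]

    def click_load_more(self) -> bool:
        if self.batches_shown < len(self.pages[self.page]):
            self.batches_shown += 1
            return True
        return False  # button gone: this page is fully expanded

    def click_next(self) -> bool:
        if self.page + 1 < len(self.pages):
            self.page += 1
            self.batches_shown = 1
            return True
        return False


def crawl_nested(site: FakeCategorySite, max_pages: int, max_load_more: int,
                 wait: Callable[[], None] = lambda: time.sleep(3)) -> List[str]:
    """Hypothetical sketch of an outer page loop with an inner "Load More" loop."""
    results: List[str] = []
    for _ in range(max_pages):              # outer loop: numbered pages
        for _ in range(max_load_more):      # inner loop: "Load More" clicks
            if not site.click_load_more():
                break
            wait()
        results.extend(site.scrape())       # one scrape per fully expanded page
        if not site.click_next():
            break
        wait()
    return results


site = FakeCategorySite([[["a", "b"], ["c"]], [["d"]], [["e"], ["f"], ["g"]]])
# wait is a no-op here so the demo runs instantly
print(crawl_nested(site, max_pages=10, max_load_more=5, wait=lambda: None))
# ['a', 'b', 'c', 'd', 'e', 'f', 'g']
```

Each loop keeps its own cap (`max_pages`, `max_load_more`), matching the advice to configure each loop with its own number of iterations.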

Conditional Pagination

Sometimes you need to stop pagination based on certain conditions. In Scrapify crawler configurations:

- Use a "Variable List" loop, which stops automatically when the "Next" button disappears

- For more complex conditions, add a dedicated extraction step that captures the value your condition depends on

- Set the maximum iterations high enough that the crawler can keep going until the condition is met; the limit then serves only as a safety net

- Alternatively, manually inspect the website to determine the exact number of pages and use a "Fixed List" loop
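The logic of a conditional stop can be sketched as a loop with three exits: the "Next" button disappears, a custom condition fires, or the iteration cap is reached. This is plain Python with hypothetical names (`crawl_until`, `should_stop`) and an invented "year" field. It illustrates the idea rather than how Scrapify's "Variable List" loop is implemented.

```python
from typing import Callable, Dict, List

Item = Dict[str, object]


def crawl_until(scrape_page: Callable[[], List[Item]],
                click_next: Callable[[], bool],
                should_stop: Callable[[List[Item]], bool],
                max_iterations: int = 500) -> List[Item]:
    """Hypothetical sketch: page until Next disappears, a condition fires, or the cap is hit."""
    results: List[Item] = []
    for _ in range(max_iterations):      # generous cap, used only as a safety net
        page_items = scrape_page()
        results.extend(page_items)
        if should_stop(page_items):      # custom condition on the data just scraped
            break
        if not click_next():             # "Next" button gone: last page reached
            break
    return results


# Hypothetical listing pages, newest first; stop once a listing is older than 2020.
pages = [[{"title": "A", "year": 2024}], [{"title": "B", "year": 2021}],
         [{"title": "C", "year": 2019}], [{"title": "D", "year": 2018}]]
state = {"page": 0}


def scrape_page() -> List[Item]:
    return pages[state["page"]]


def click_next() -> bool:
    if state["page"] + 1 < len(pages):
        state["page"] += 1
        return True
    return False


items = crawl_until(scrape_page, click_next, lambda found: any(i["year"] < 2020 for i in found))
print([i["title"] for i in items])  # ['A', 'B', 'C']
```

Note that the page which triggers the condition is still included in the results, so filter out unwanted items afterwards if needed.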

Handling Pagination Errors

To make your crawler more robust:

- Add Wait actions with sufficient duration to ensure pages load completely

- Add error handling through Scrapify's retry settings in your project configuration

- Consider using multiple crawler variations to handle different pagination scenarios

- Use URL List loops with manually verified URLs for the most reliable extraction
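Scrapify handles retries through its project settings, so you would not normally write this yourself. For intuition about what a retry policy does, here is a generic sketch of retrying with exponential backoff. The helper name, the three attempts, and the doubling delay are assumptions, not a description of Scrapify's retry behavior.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(action: Callable[[], T], attempts: int = 3, base_delay: float = 2.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Hypothetical retry helper: call `action`, waiting 2 s, 4 s, ... between failed attempts."""
    for attempt in range(attempts):
        try:
            return action()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)  # exponential backoff
    raise ValueError("attempts must be at least 1")


calls = {"count": 0}


def load_next_page() -> str:
    # Hypothetical flaky action: times out twice, then succeeds.
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("page did not finish loading")
    return "page 2 content"


delays = []
print(with_retries(load_next_page, sleep=delays.append), delays)
# page 2 content [2.0, 4.0]
```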

Real-World Example: E-commerce Category Scraper

Let's walk through setting up a crawler for an e-commerce category page:

- Create a Loop action with type "Fixed List" and set iterations to 20 (to crawl 20 pages)

- Inside the loop, add a Scrape action to extract product names, prices, and ratings

- Enable "Similar Elements" in the Scrape action to automatically detect all products on the page

- After the Scrape, add a Click Button action targeting the "Next" button

- Add a Wait action of 5 seconds to ensure the next page loads completely

- Run your crawler and monitor the first few pages to verify extraction quality

- Adjust actions as needed to ensure reliable data extraction across all pages
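While monitoring the first pages, a quick per-page summary makes gaps easy to spot. The hypothetical helper below assumes each exported record carries a `page` field (which you may need to add yourself) plus the three fields from this example; adapt the field names to your own export.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Record = Dict[str, object]


def summarize_pages(records: List[Record],
                    required: Sequence[str] = ("name", "price", "rating")) -> Tuple[Counter, List[Record]]:
    """Hypothetical check: count records per page and collect records with empty required fields."""
    per_page = Counter(r["page"] for r in records)
    incomplete = [r for r in records if any(r.get(f) in (None, "") for f in required)]
    return per_page, incomplete


records = [
    {"page": 1, "name": "Lamp", "price": "19.99", "rating": "4.5"},
    {"page": 1, "name": "Desk", "price": "", "rating": "4.0"},
    {"page": 2, "name": "Chair", "price": "49.00", "rating": None},
]
per_page, incomplete = summarize_pages(records)
print(dict(per_page))                    # {1: 2, 2: 1}
print([r["name"] for r in incomplete])   # ['Desk', 'Chair']
```

A page that yields far fewer records than its neighbors, or is missing from the counts entirely, usually points to a selector or timing problem worth fixing before a full run.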

Important Note

When crawling multiple pages, your dataset can become large. Use Scrapify's export features to save your data in your preferred format (CSV, JSON, etc.) and consider incremental crawling for very large websites.
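Incremental crawling means processing only what is new since the last run. One simple way to approximate that on exported data is to remember the unique keys you have already seen in a small state file. The helper name, the `seen.json` file, and the choice of `url` as the key are all assumptions for illustration, not Scrapify features.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def keep_new_records(records: List[Dict[str, str]], state_file: Path,
                     key: str = "url") -> List[Dict[str, str]]:
    """Hypothetical helper: return records unseen in earlier runs, then save the updated key set."""
    seen = set(json.loads(state_file.read_text())) if state_file.exists() else set()
    new: List[Dict[str, str]] = []
    for record in records:
        if record[key] not in seen:     # also skips repeats within this batch
            seen.add(record[key])
            new.append(record)
    state_file.write_text(json.dumps(sorted(seen)))
    return new


with tempfile.TemporaryDirectory() as tmp:
    state = Path(tmp) / "seen.json"
    first_run = keep_new_records([{"url": "/p/1"}, {"url": "/p/2"}], state)
    second_run = keep_new_records([{"url": "/p/2"}, {"url": "/p/3"}], state)
    print(len(first_run), len(second_run))  # 2 1
```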

Troubleshooting Common Pagination Issues

If you encounter problems with pagination:

- Crawler stops prematurely: Check if your loop iterations are set too low or if the "Next" button selector no longer works on some pages

- Duplicate data: Ensure your Scrape action is properly configured to extract unique elements on each page

- Missing data: Increase Wait duration to ensure pages fully load before scraping

- Slow performance: Extract only the data you need, and use wait times that match how quickly the site actually loads

- Getting blocked: Increase delays between actions and consider using Scrapify's IP rotation features (Enterprise plan)

Conclusion

With Scrapify's crawler configurator, you can extract data from websites that use any of the pagination types covered here by building sequences of actions within loops. Remember to always crawl responsibly, with reasonable delays and iteration limits, to avoid overloading websites.

In our next tutorial, we'll cover how to organize and export your crawled data to make it more usable for analysis.